This isn't about your computer's clock speed; it is about the way your computer tells the time. When you use the internet, what you see on your screen is a "browser window", and the browser uses JavaScript (a trademark originally held by Sun Microsystems and now by Oracle) to display the time.

See Oracle and Delphi

The method JavaScript uses to calculate the time is set out in the ECMAScript standard (ECMA-262), a copyrighted specification.

JavaScript (in your web browser) counts time as the number of milliseconds (thousandths of a second) since midnight UTC at the start of 1 January 1970.

<script type="text/javascript">
// getTime() returns the milliseconds elapsed since 1970-01-01 00:00:00 UTC
var d = new Date();
document.write(d.getTime() + " milliseconds since 1970/01/01");
</script>

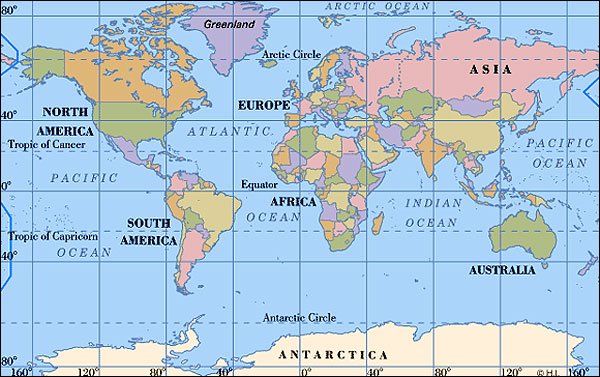

Your computer has to synchronise its clock with something external, so you set it to GMT, to 000:00' New Earth Time (NET), or to your local time. Local time is corrected daily against the radio 'time pips' at noon, but if you listen to the radio over the internet, the pips arrive about 18 seconds late.
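Most computers now do this synchronisation automatically over the network, typically with the Network Time Protocol (NTP). As a minimal sketch of the idea (not the code any particular operating system runs; the function name is illustrative), the client records four timestamps and estimates both its clock error and the round-trip delay. Unlike the unmeasured 18-second delay of an internet radio stream, this delay is measured and cancelled, assuming the outbound and return trips take equally long:

```javascript
// Estimate clock offset and round-trip delay from one NTP-style exchange.
// t0: client send time (client clock)   t1: server receive time (server clock)
// t2: server send time (server clock)   t3: client receive time (client clock)
// All values in milliseconds. Assumes outbound and return paths take equal time.
function ntpEstimate(t0, t1, t2, t3) {
  const offset = ((t1 - t0) + (t2 - t3)) / 2; // amount to add to the client clock
  const delay = (t3 - t0) - (t2 - t1);        // round trip, excluding server processing
  return { offset, delay };
}

// Example: the client clock is 60 s slow and each direction takes 50 ms.
console.log(ntpEstimate(1000, 61050, 61060, 1110)); // { offset: 60000, delay: 100 }
```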

Why is your computer's clock showing a different time from your cell phone and the rest of the world?

Read the blog on the next page.

This would possibly explain why the clock is one second out, but it wouldn't explain a whole minute or more.

What else is wrong with Microsoft's timing system?

<script type="text/javascript">
// getFullYear() returns the four-digit year in the visitor's local time zone
var d = new Date();
document.write(d.getFullYear());
</script>

Daylight saving time is pre-programmed and cannot be changed. I'm not sure how your cell phone does this, but it probably copied some corrupted source code. A cell phone does not have to use JavaScript by default; however, its web browser does run JavaScript when it connects to the 'Internet' through 'broadband' or 'JetStream'.
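The daylight saving rules that JavaScript uses do come pre-programmed: the engine reads them from the time zone database supplied with the browser or operating system (usually the IANA tz database), which changes only through software updates. A minimal sketch that asks that database for a zone's UTC offset at a given instant, using only the standard `Intl.DateTimeFormat` API (the function name `zoneOffsetMinutes` is hypothetical):

```javascript
// UTC offset in minutes of an IANA time zone (e.g. "Pacific/Auckland") at a
// given instant, read from the time zone database built into the JavaScript engine.
function zoneOffsetMinutes(date, timeZone) {
  const fmt = new Intl.DateTimeFormat("en-US", {
    timeZone,
    hourCycle: "h23",
    year: "numeric", month: "numeric", day: "numeric",
    hour: "numeric", minute: "numeric", second: "numeric",
  });
  // Collect the wall-clock fields (year, month, day, ...) shown in that zone
  const f = {};
  for (const { type, value } of fmt.formatToParts(date)) {
    if (type !== "literal") f[type] = Number(value);
  }
  // Treat the wall-clock reading as if it were UTC, then compare with the instant
  const wallAsUtc = Date.UTC(f.year, f.month - 1, f.day, f.hour % 24, f.minute, f.second);
  const instant = Math.floor(date.getTime() / 1000) * 1000; // drop milliseconds
  return Math.round((wallAsUtc - instant) / 60000);
}

// New Zealand in January (daylight time) and July (standard time), 2011
console.log(zoneOffsetMinutes(new Date(Date.UTC(2011, 0, 15)), "Pacific/Auckland")); // 780
console.log(zoneOffsetMinutes(new Date(Date.UTC(2011, 6, 15)), "Pacific/Auckland")); // 720
```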

Your wristwatch, if you still use one, can be corrected: if it is running fast and you correct it, it will try to adjust to the actual time. This may not be possible if real time is about 20 minutes a day longer than the range the watch manufacturer allowed for. If it continues to race ahead, return it and ask for a refund.
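A watch's error is usually described as a fractional rate error (for a quartz watch, often in parts per million). Converting between that and seconds per day shows how far apart "a minute a month" and "20 minutes a day" really are. The figures below are illustrative assumptions, not any manufacturer's specification:

```javascript
const SECONDS_PER_DAY = 86400;

// Seconds gained (positive) or lost (negative) per day for a fractional
// rate error, e.g. 20e-6 for a watch running 20 ppm fast.
function driftPerDay(fractionalError) {
  return fractionalError * SECONDS_PER_DAY;
}

// Fractional rate error implied by an observed drift in seconds per day.
function fractionalErrorFromDrift(secondsPerDay) {
  return secondsPerDay / SECONDS_PER_DAY;
}

console.log(driftPerDay(20e-6).toFixed(3));     // 1.728 seconds per day at 20 ppm
console.log(fractionalErrorFromDrift(60 / 30)); // a minute a month: about 2.3e-5 (23 ppm)
console.log(fractionalErrorFromDrift(20 * 60)); // 20 minutes a day: about 0.0139 (1.4 %)
```

An error of 1.4 % is several hundred times larger than the 23 ppm of "a minute a month", which is why no regulator range would cover it.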

How do I know the real time?

You make an observation of the sun. Naturally, it is no good believing your watch is right if it says 4.00pm when the sun is at its highest point in the sky.

In Auckland, New Zealand, for instance, the sun reaches its highest point (solar noon) on average roughly 20 minutes after official noon on standard time, because Auckland lies about 5° of longitude west of the 180° meridian on which New Zealand Standard Time (GMT+12) is based. The world is split into 24 nominal time zones, each about 15° of longitude wide, which roughly match when the sun is highest in each of them. People who navigate by the sun need to know which time zone they are in so they can correct the time by adding or subtracting a number of hours from GMT (Greenwich Mean Time). By observing when the sun is actually at its highest point, they can calculate their longitude if they know what time it is at Greenwich.
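The navigator's calculation can be sketched as follows. This is a simplification that ignores the equation of time (a seasonal correction of up to about 16 minutes that real navigators apply); longitudes are positive east of Greenwich, and the function names are illustrative:

```javascript
// The Earth turns 360 degrees in 24 hours: 15 degrees of longitude per hour.
const DEGREES_PER_HOUR = 15;

// Longitude (degrees, east positive) from the GMT time, in decimal hours,
// at which the sun was observed at its highest point.
function longitudeFromNoon(gmtHoursAtNoon) {
  let lon = (12 - gmtHoursAtNoon) * DEGREES_PER_HOUR;
  // Normalise into the range (-180, 180]
  while (lon <= -180) lon += 360;
  while (lon > 180) lon -= 360;
  return lon;
}

// Clock time (decimal hours) of solar noon for a longitude and a time zone
// offset in hours from GMT (e.g. +12 for New Zealand Standard Time).
function solarNoonClockTime(longitudeDeg, zoneOffsetHours) {
  return 12 + zoneOffsetHours - longitudeDeg / DEGREES_PER_HOUR;
}

// Auckland is at about 174.76 degrees east, on GMT+12:
console.log(solarNoonClockTime(174.76, 12)); // about 12.35, i.e. roughly 12:21
```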

An atomic clock is a clock that uses an electronic transition frequency in the microwave, optical, or ultraviolet region[2] of the electromagnetic spectrum of atoms as a frequency standard for its timekeeping element. Atomic clocks are the most accurate time and frequency standards known, and are used as primary standards for international time distribution services, to control the wave frequency of television broadcasts, and in global navigation satellite systems such as GPS.

The principle of operation of an atomic clock is not based on nuclear physics but on atomic physics: it uses the microwave signal that electrons in atoms emit when they change energy levels. Early atomic clocks were based on masers at room temperature. Currently, the most accurate atomic clocks first cool the atoms to near absolute zero by slowing them with lasers, then probe them in atomic fountains in a microwave-filled cavity. An example of this is the NIST-F1 atomic clock, the U.S. national primary time and frequency standard.
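Since 1967 the SI second has been defined as 9,192,631,770 periods of the radiation from the hyperfine transition of the caesium-133 atom, so a caesium clock tells the time by counting those cycles. A minimal sketch of the conversion (the names are illustrative):

```javascript
// The SI second is 9,192,631,770 periods of the caesium-133 hyperfine transition.
const CS133_HZ = 9192631770;

// Convert a count of caesium cycles into seconds.
function cyclesToSeconds(cycles) {
  return cycles / CS133_HZ;
}

// Cycles counted in one day of 86,400 seconds:
console.log(CS133_HZ * 86400); // 794243384928000
```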

<td width="100%">

<select name="city" size="1" onchange="updateclock(this);">
<option value="" selected>Local time</option>
<option value="0">London GMT</option>
<option value="1">Rome</option>
<option value="7">Bangkok</option>
<option value="8">Hong Kong</option>
<option value="9">Tokyo</option>
<option value="10">Sydney</option>
<option value="12">Fiji</option>
<option value="-10">Hawaii</option>
<option value="-8">San Francisco</option>
<option value="-5">New York</option>
<option value="-3">Buenos Aires</option>
</select>
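Each value in the menu is an offset in whole hours from GMT, passed to an `updateclock` function whose code is not reproduced here. A hypothetical sketch of what such a function has to compute: start from UTC, add the offset, and read the result back with the UTC getters so that the visitor's own time zone is not applied a second time. Daylight saving is ignored, so the offsets must be changed by hand when it starts or ends:

```javascript
// Hypothetical helper: the time in a city that is `offsetHours` from GMT.
// `utcMs` is a millisecond timestamp such as Date.now().
function cityTime(utcMs, offsetHours) {
  const shifted = new Date(utcMs + offsetHours * 3600 * 1000);
  // Read with the UTC getters so the visitor's own time zone is not applied again
  const pad = (n) => String(n).padStart(2, "0");
  return pad(shifted.getUTCHours()) + ":" +
         pad(shifted.getUTCMinutes()) + ":" +
         pad(shifted.getUTCSeconds());
}

// 00:00 GMT on 30 September 2011, seen from Tokyo (+9) and New York (-5)
const t = Date.UTC(2011, 8, 30, 0, 0, 0);
console.log(cityTime(t, 9));  // 09:00:00
console.log(cityTime(t, -5)); // 19:00:00 (the previous evening)
```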

Countdown to Friday 21st December 2012, 11.11 am (New Year's Day on the Mayan calendar)

"Great Cycle" of 1,872,000 days (5,200 tuns, or about 5,125 years): http://science.nasa.gov/science-news/science-at-nasa/2001/ast15feb_1/
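The countdown is simple arithmetic on millisecond timestamps. A minimal sketch, assuming the 11.11 am target is Universal Time (the page does not say which time zone), which also checks the Great Cycle figures using a tun of 360 days:

```javascript
// Target: Friday 21 December 2012, 11:11 (taken here as UTC; an assumption)
const TARGET_MS = Date.UTC(2012, 11, 21, 11, 11, 0);

// Break the time remaining until `targetMs` into days, hours, minutes, seconds.
function countdown(targetMs, nowMs) {
  let s = Math.max(0, Math.floor((targetMs - nowMs) / 1000)); // whole seconds left
  const days = Math.floor(s / 86400); s -= days * 86400;
  const hours = Math.floor(s / 3600); s -= hours * 3600;
  const minutes = Math.floor(s / 60); s -= minutes * 60;
  return { days, hours, minutes, seconds: s };
}

// The Great Cycle: 5,200 tuns of 360 days each
const GREAT_CYCLE_DAYS = 5200 * 360;                   // 1,872,000 days
const GREAT_CYCLE_YEARS = GREAT_CYCLE_DAYS / 365.2425; // about 5,125 Gregorian years

console.log(countdown(TARGET_MS, Date.UTC(2012, 11, 20, 10, 10, 59)));
// { days: 1, hours: 1, minutes: 0, seconds: 1 }
```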

The first self-winding wristwatch did not appear until after World War I, when wristwatches became popular. It was invented by a watch repairer from the Isle of Man named John Harwood in 1923,[10] who took out a UK patent with his financial backer, Harry Cutts, on 7 July 1923, and a corresponding Swiss patent on 16 October 1923.

The Rolex Watch Company improved Harwood's design in 1930 and used it as the basis for the Rolex Oyster Perpetual, in which the centrally mounted semi-circular weight could rotate through a full 360° rather than the 300° of the 'bumper' winder. Rolex's version also increased the amount of energy stored in the mainspring, allowing it to run autonomously for up to 35 hours.

Google smears time: listen to the Radio New Zealand Nine to Noon segment (from 14:40):

http://www.radionz.co.nz/national/programmes/ninetonoon

- 11:05 New Technology with Donald Clark: Microsoft provides a sneak preview of the next version of Windows; Google smears time; and coconuts and sunshine to power Tokelau. (22′53″)

You can find the current local time for any city here:

Calculate the number of years since 1970/01/01:

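<script type="text/javascript">
// Milliseconds in a minute, an hour, a day and an average (Julian) year of 365.25 days
var minutes = 1000 * 60;
var hours = minutes * 60;
var days = hours * 24;
var years = days * 365.25;
var d = new Date();
var t = d.getTime(); // milliseconds since 1970/01/01 00:00 UTC
var y = t / years;
// Math.floor counts completed years only
document.write("It's been " + Math.floor(y) + " years since 1970/01/01!");
</script>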
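Dividing by a fixed year length only approximates the answer. A minimal sketch comparing it with counting calendar years directly (the function names are illustrative): near the end of a year, 365-day years overshoot because the ignored leap days add up:

```javascript
const MS_PER_DAY = 1000 * 60 * 60 * 24;

// Approximate: divide by a fixed year length (365 days ignores leap days)
function yearsByDivision(ms, daysPerYear) {
  return Math.floor(ms / (MS_PER_DAY * daysPerYear));
}

// Exact for dates after the epoch: count calendar years in UTC
function yearsByCalendar(ms) {
  return new Date(ms).getUTCFullYear() - 1970;
}

const xmas2011 = Date.UTC(2011, 11, 25);
console.log(yearsByDivision(xmas2011, 365));    // 42 (one too many)
console.log(yearsByDivision(xmas2011, 365.25)); // 41
console.log(yearsByCalendar(xmas2011));         // 41
```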

http://www.javascriptkit.com/script/script2/dropworldclock.shtml

Just open your web page editor, drag the HTML text editor onto your page, and copy and paste the code from this script.

You will need to alter the values to get the correct time.

Return the hour, according to local time:

<script type="text/javascript">
// getHours() returns the hour (0-23) in the visitor's local time zone
var d = new Date();
document.write(d.getHours());
</script>

The original display showed the wrong time for Sydney with respect to Auckland local time, so I replaced the word Sydney with Sydnee and set the value to 15 instead of "10".

Tokyo is one time zone (one hour) behind Sydney, GMT+9 against GMT+10 on standard time, so I set its value one less than Sydney's.

You can add a city by:

copying and pasting this line into the code opposite (in your HTML editor):

<option value="11">Hong Kong</option>

Replace the words Hong Kong (between the > and <) with the name of your new city.

Replace the value "11" with the value for that city.

You may have to experiment. Find out what the correct time is by going to the web site above.

Put in a number, say -10, or the value of a city near yours. Increase or decrease that number until your clock shows the correct time.

<select name="city" size="1" onchange="updateclock(this);">
<option value="6">London GMT</option>
<option value="7">Rome</option>
<option value="-12">Bangkok</option>
<option value="-11">Hong Kong</option>
<option value="-10">Tokyo</option>
<option value="-9">Sydnee</option>
<option value="-11">Perth</option>
<option value="-7">Fiji</option>
<option value="-5">Hawaii</option>
<option value="-7">Auckland</option>
<option value="1">New York</option>
<option value="-10">Buenos Aires</option>
</select>

Why is music filed under bananas?

To convert New Earth Time (NET) to GMT, divide the NET degrees by 15 (15 degrees to the hour, 360 degrees per day). However, the starting point 000:00' has shifted from Greenwich by 5 hours since this was written by four Courtville (Auckland) residents, so that method no longer works on its own. Instead, divide by 15 (treating 24 as 0) and add 6 hours.
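The divide-by-15 rule can be sketched as below. It assumes each NET degree is split into 60 NET minutes ('), as in the readings 358:44' further down, and takes the 6-hour correction described above as an adjustable parameter rather than a fixed constant; the function names are illustrative:

```javascript
// New Earth Time (NET) gives the time of day as an angle: 360 degrees per
// day, 15 degrees per hour, 60 NET minutes (') per degree.
// `correctionHours` is the extra shift described above (6 hours in the text).
function netToHours(degrees, netMinutes, correctionHours = 6) {
  const hours = (degrees + netMinutes / 60) / 15 + correctionHours;
  return ((hours % 24) + 24) % 24; // wrap so that 24 becomes 0
}

// Format decimal hours as H:MM
function formatHours(h) {
  let hh = Math.floor(h);
  let mm = Math.round((h - hh) * 60);
  if (mm === 60) { hh = (hh + 1) % 24; mm = 0; }
  return hh + ":" + String(mm).padStart(2, "0");
}

console.log(formatHours(netToHours(0, 0)));    // 6:00
console.log(formatHours(netToHours(358, 44))); // 5:55
```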

There is a problem

Current time (cell phone time) is:

+1 minute, 1 September 2011 (ahead of internet time)

Check your cell phone against your computer.

| Date | Internet Time (IT) | NZT (DST) | NET |
|---|---|---|---|
| Wed 25th September 2011 | 4:58:37 | 6.00 | 358:44' |
| | 5:00:00 | 6.01 | 00:00' |
| Friday 30th September | | 6.00 | 00:00' |
| | 12:00:00 | Midnight 30th Sept. 12.00:00 | |

(Why does your computer show a different time?)

The explanation

Read the article: Google Smears Time
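The article describes "smearing" a leap second: instead of inserting a whole extra second at midnight, the servers make the clock run very slightly slow over a longer period, so the extra second is absorbed gradually. A minimal sketch, assuming a linear smear over a 24-hour window; both the shape and the window length are illustrative assumptions, not necessarily Google's exact parameters:

```javascript
// Assumed smear window: the leap second is spread over 24 hours.
const SMEAR_WINDOW_MS = 24 * 60 * 60 * 1000;

// Time shown by a smeared clock, measured from the start of the window,
// after `elapsedMs` of real (SI) time. During the window each smeared
// second is slightly longer than a real one.
function smearedElapsed(elapsedMs, windowMs = SMEAR_WINDOW_MS) {
  if (elapsedMs <= 0) return elapsedMs;                   // before the smear starts
  const realWindowMs = windowMs + 1000;                   // the window really lasts 1 s longer
  if (elapsedMs >= realWindowMs) return elapsedMs - 1000; // smear finished
  return (elapsedMs * windowMs) / realWindowMs;           // scale real time down linearly
}

// Halfway through the real window the smeared clock is half a second behind
console.log(smearedElapsed(43200500)); // 43200000
```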

How does this affect time?

Light travels at a constant speed.

Einstein showed that the faster an object (a mass) moves relative to an observer, the more slowly time passes for it, as measured by that observer. What happens when a galaxy expands, as it does as it travels in space? If the bodies revolving around the centre of the galaxy (solar systems) continue to travel at the same speed, time does not increase. The planets of a solar system may also continue at the same velocity, taking one year to complete one revolution.
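The size of the effect follows from the textbook special-relativity formula: a clock moving at speed v ticks slower by the Lorentz factor γ = 1/√(1 − v²/c²). A minimal sketch (it ignores gravity's effect on clocks; the names are illustrative). Because every clock on Earth shares the Earth's orbital motion, the effect is the same for your computer and your cell phone:

```javascript
const C = 299792458; // speed of light in m/s (exact by definition)

// Lorentz factor for a speed v (m/s): moving clocks tick slower by this factor
function lorentzFactor(v) {
  const beta = v / C;
  return 1 / Math.sqrt(1 - beta * beta);
}

// Seconds per day by which a clock moving at v falls behind a clock at rest
function lagPerDay(v) {
  const secondsPerDay = 86400;
  return secondsPerDay - secondsPerDay / lorentzFactor(v);
}

// Earth's average orbital speed is about 29,780 m/s
console.log(lagPerDay(29780)); // roughly 4.3e-4 s, less than half a millisecond
```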

How do we measure time?

If a second is a fixed fraction of a year (the time it takes the earth to complete one revolution of the sun), defined as 365.25 days, each divided into 24 hours, 60 minutes per hour and 60 seconds per minute, then there is no change.

What if we count thousandths of a second and add them up to make minutes, hours and days? That is how the computer calculates time.
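A minimal sketch of that counting. JavaScript itself ignores leap seconds and treats every day as exactly 86,400,000 ms, and so does this sketch (the function name is illustrative):

```javascript
const MS_PER_DAY = 24 * 60 * 60 * 1000;

// Split a millisecond count since 1970-01-01 00:00 UTC into whole days
// and the UTC time of day.
function splitMilliseconds(ms) {
  const days = Math.floor(ms / MS_PER_DAY);
  let rest = ms - days * MS_PER_DAY; // milliseconds into the current day
  const hours = Math.floor(rest / 3600000); rest -= hours * 3600000;
  const minutes = Math.floor(rest / 60000); rest -= minutes * 60000;
  const seconds = Math.floor(rest / 1000);
  const millis = rest - seconds * 1000;
  return { days, hours, minutes, seconds, millis };
}

console.log(splitMilliseconds(Date.UTC(2011, 8, 30, 17, 0, 0)));
// { days: 15247, hours: 17, minutes: 0, seconds: 0, millis: 0 }
```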

So what is going wrong?

There are a couple of possible answers.

One is that the original software copied onto the cell phone was faulty and nobody knew, or that it was a deliberate mistake.

Why does the sun agree with cell phone time then?

Does it? We calculate time from an atomic clock. An atomic clock is not a nuclear clock, just as an atom is not a nucleus. The atomic clock puts out a regular number of beats, but these do not necessarily correspond to the position of the sun. At noon the sun is at its highest point. We presume the sun wobbles a little (or the earth does), and its position may not be reliable.
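Much of the difference between clock time and the sun is predictable. Because the Earth's orbit is elliptical and its axis is tilted, apparent solar time runs up to about 16 minutes ahead of mean (clock) time in early November and about 14 minutes behind it in mid-February. This "equation of time" can be estimated with a common approximation, used here for illustration only:

```javascript
// Approximate equation of time in minutes for day-of-year `n` (1 = 1 January).
// Positive: the sun (sundial) is ahead of the clock; negative: behind.
function equationOfTime(n) {
  const b = (2 * Math.PI * (n - 81)) / 365; // the angle B, in radians
  return 9.87 * Math.sin(2 * b) - 7.53 * Math.cos(b) - 1.5 * Math.sin(b);
}

console.log(equationOfTime(307).toFixed(1)); // early November: about +16.4
console.log(equationOfTime(42).toFixed(1));  // mid-February: about -14.6
```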

How will we know who is right?

If the computer's clock is slowing down, and we are expecting this, we know that sooner or later our mechanical watches will start gaining time. In fact they will be keeping the same time they always did. We can adjust them to slow them down, but only within the limits (a range) the manufacturer has built into them. They may be able to cope with a minute a month, but not 20 minutes. We may have to return them to the manufacturer or throw them away.

We are presuming the sun will keep pace with the slowing time, and the year will take the same time, even though the individual seconds may be longer. Daylight saving time has been pre-programmed into the computer and cannot be changed, which is why my clock is showing 5:00pm (4:58) when the country is already watching the 6:00 news on television. Daylight saving time will correct itself, just as my cell phone did. It will not, however, explain the difference between my cell phone and my computer.

The clock rate is the frequency of the clock in any synchronous circuit, such as a central processing unit (CPU), measured in cycles per second (hertz). The clock period is measured in time units (not cycles) and is the time between successive cycles; it is the reciprocal of the clock rate.

For example, a crystal oscillator frequency reference typically produces a fixed sinusoidal waveform; the clock signal is that frequency reference translated by electronic circuitry into a corresponding square-wave pulse train (or sampling rate) for digital electronics applications. In this context the word "speed" (physical movement) should not be confused with frequency or its corresponding clock rate. Thus, the term "clock speed" is a misnomer.

During a single clock cycle (typically shorter than a nanosecond in modern non-embedded microprocessors), the clock signal passes through one logical-one phase and one logical-zero phase.

CPU manufacturers typically charge premium prices for CPUs that operate at higher clock rates, a practice called binning. For a given CPU, the clock rates are determined at the end of the manufacturing process through actual testing of each CPU. CPUs that are tested as complying with a given set of standards may be labeled with a higher clock rate, e.g., 1.50 GHz, while those that fail the standards of the higher clock rate yet pass the standards of a lesser clock rate may be labeled with the lesser clock rate, e.g., 1.33 GHz, and sold at a lower price.[1] [2]

The clock rate of a CPU is normally determined by the frequency of an oscillator crystal. The first commercial PC, the Altair 8800 (by MITS), used an Intel 8080 CPU with a clock rate of 2 MHz (2 million cycles/second). The original IBM PC (c. 1981) had a clock rate of 4.77 MHz (4,772,727 cycles/second). In 1995, Intel's P5 Pentium chip ran at 100 MHz (100 million cycles/second), and in 2002, an Intel Pentium 4 model was introduced as the first CPU with a clock rate of 3 GHz (three billion cycles/second, corresponding to about 0.33 × 10⁻⁹ seconds, or 0.33 ns, per cycle).
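Since the clock period is the reciprocal of the clock rate, the per-cycle times for the processors above can be checked with a few lines (a minimal sketch; the names are illustrative):

```javascript
// Clock period (seconds per cycle) is the reciprocal of the clock rate (Hz).
function clockPeriodSeconds(rateHz) {
  return 1 / rateHz;
}

// Clock rates quoted in the paragraph above
const cpus = [
  ["Intel 8080 (Altair 8800)", 2e6],
  ["IBM PC", 4772727],
  ["Pentium (1995)", 100e6],
  ["Pentium 4 (2002)", 3e9],
];

for (const [name, hz] of cpus) {
  const ns = clockPeriodSeconds(hz) * 1e9; // convert seconds to nanoseconds
  console.log(`${name}: ${ns.toFixed(2)} ns per cycle`);
}
```

The lines print 500.00, 209.52, 10.00 and 0.33 ns per cycle respectively.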

Clock rate

The clock rate sets the pace at which a microprocessor executes instructions. Every computer contains an internal clock that regulates the rate at which instructions are executed and synchronizes all the various computer components. In the simplest model, the CPU requires a fixed number of clock ticks (or clock cycles) to execute each instruction, so the faster the clock, the more instructions the CPU can execute per second.
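Under that simple model, throughput is just the clock rate divided by the cycles per instruction (CPI). A minimal sketch with hypothetical figures; real CPUs vary CPI by instruction and can complete several instructions per cycle:

```javascript
// Simplified model: each instruction takes a fixed number of cycles (CPI).
function instructionsPerSecond(clockHz, cyclesPerInstruction) {
  return clockHz / cyclesPerInstruction;
}

// Hypothetical CPU: 100 MHz at 4 cycles per instruction
console.log(instructionsPerSecond(100e6, 4)); // 25000000
```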

Most CPUs, and indeed most sequential logic devices, are synchronous in nature.[10] That is, they are designed and operate on assumptions about a synchronization signal. This signal, known as a clock signal, usually takes the form of a periodic square wave. By calculating the maximum time that electrical signals can move in various branches of a CPU's many circuits, the designers can select an appropriate period for the clock signal.

This period must be longer than the amount of time it takes for a signal to move, or propagate, in the worst-case scenario. In setting the clock period to a value well above the worst-case propagation delay, it is possible to design the entire CPU and the way it moves data around the "edges" of the rising and falling clock signal. This has the advantage of simplifying the CPU significantly, both from a design perspective and a component-count perspective. However, it also carries the disadvantage that the entire CPU must wait on its slowest elements, even though some portions of it are much faster. This limitation has largely been compensated for by various methods of increasing CPU parallelism. (see below)
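The constraint above sets an upper limit on the clock rate: the period can be no shorter than the worst-case propagation delay plus a safety margin. A minimal sketch, where both the delay and the margin are illustrative assumptions:

```javascript
// The clock period must exceed the worst-case propagation delay through the
// slowest path, plus a safety margin (given as a fraction of that delay).
function maxClockHz(worstCaseDelaySeconds, marginFraction = 0) {
  const minPeriod = worstCaseDelaySeconds * (1 + marginFraction);
  return 1 / minPeriod;
}

// Slowest path 0.4 ns with a 25% margin: period 0.5 ns, so about 2 GHz
console.log(maxClockHz(0.4e-9, 0.25) / 1e9); // about 2
```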

However, architectural improvements alone do not solve all of the drawbacks of globally synchronous CPUs. For example, a clock signal is subject to the delays of any other electrical signal. Higher clock rates in increasingly complex CPUs make it more difficult to keep the clock signal in phase (synchronized) throughout the entire unit. This has led many modern CPUs to require multiple identical clock signals to be provided in order to avoid delaying a single signal significantly enough to cause the CPU to malfunction. Another major issue as clock rates increase dramatically is the amount of heat that is dissipated by the CPU. The constantly changing clock causes many components to switch regardless of whether they are being used at that time. In general, a component that is switching uses more energy than an element in a static state. Therefore, as clock rate increases, so does heat dissipation, causing the CPU to require more effective cooling solutions.
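The link between clock rate and heat is commonly summarised by the standard approximation for dynamic (switching) power in CMOS chips, P ≈ α·C·V²·f, where α is the fraction of the circuit switching each cycle, C the switched capacitance, V the supply voltage and f the clock rate. A minimal sketch with made-up illustrative values:

```javascript
// Dynamic (switching) power of CMOS logic: P = activity * C * V^2 * f
function dynamicPowerWatts(activity, capacitanceFarads, volts, clockHz) {
  return activity * capacitanceFarads * volts * volts * clockHz;
}

// Illustrative values only: 10% activity, 1 nF switched, 1.2 V
const at1GHz = dynamicPowerWatts(0.1, 1e-9, 1.2, 1e9);
const at3GHz = dynamicPowerWatts(0.1, 1e-9, 1.2, 3e9);
console.log(at1GHz.toFixed(3), at3GHz.toFixed(3)); // 0.144 0.432
```

At a fixed voltage, tripling the clock rate triples the switching power; in practice higher clock rates often also need a higher supply voltage, which raises power faster still because of the V² term.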

Below is the code from the http://www.javascriptkit.com/script/script2/dropworldclock.shtml web site (page 2).